How Humanoid Robots Use Computer Vision to Navigate

Sponsored by Robot Center, Robots of London, and Robot Philosophy

The integration of computer vision with humanoid robotics is one of the most significant advances in modern robotics. As these machines spread into industries ranging from healthcare to hospitality, understanding how they perceive and navigate their environment is crucial for businesses looking to implement robotic solutions.

The Foundation of Robot Vision

Computer vision serves as the digital eyes of humanoid robots, enabling them to interpret and understand visual information from their surroundings. Unlike traditional industrial robots that operate in controlled, predictable environments, humanoid robots must navigate complex, dynamic spaces filled with obstacles, people, and constantly changing conditions.

The computer vision system in a humanoid robot typically consists of multiple components working in harmony. High-resolution cameras, often stereoscopic pairs, capture visual data from multiple angles simultaneously. These cameras are strategically positioned to provide comprehensive coverage, mimicking the human visual system’s ability to perceive depth and spatial relationships.

Many modern humanoid robots use sensor fusion, combining visual data from cameras with readings from LiDAR sensors, ultrasonic sensors, and inertial measurement units (IMUs). This multi-sensor approach creates a robust perception system that keeps working when an individual sensor is degraded, for example a camera blinded by glare or a LiDAR confused by a glass wall.
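As a minimal sketch of the fusion idea, assume each sensor reports an independent distance estimate with Gaussian noise of known variance. Estimates can then be combined by inverse-variance weighting, which is the same rule a Kalman filter's update step applies to a single scalar quantity. The `Measurement` type, the `fuse` function, and the noise figures are illustrative assumptions, not taken from any particular robot.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Measurement:
    value: float     # estimated distance to an obstacle, metres
    variance: float  # assumed sensor noise variance, m^2


def fuse(measurements: List[Measurement]) -> Measurement:
    """Inverse-variance weighted fusion of independent estimates of one quantity."""
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    # The fused variance is never larger than the best single sensor's variance.
    return Measurement(value=value, variance=1.0 / total)


# Hypothetical readings: a noisy stereo estimate and a more precise LiDAR return.
camera = Measurement(value=2.10, variance=0.04)
lidar = Measurement(value=2.00, variance=0.0004)
fused = fuse([camera, lidar])
print(f"{fused.value:.4f} m, variance {fused.variance:.6f}")
```

The more precise sensor dominates the result. If the camera estimate is lost (say, to glare), calling `fuse` with the LiDAR reading alone still returns a valid estimate, which is the kind of robustness to individual sensor limitations the paragraph describes.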

Advanced Image Processing Algorithms

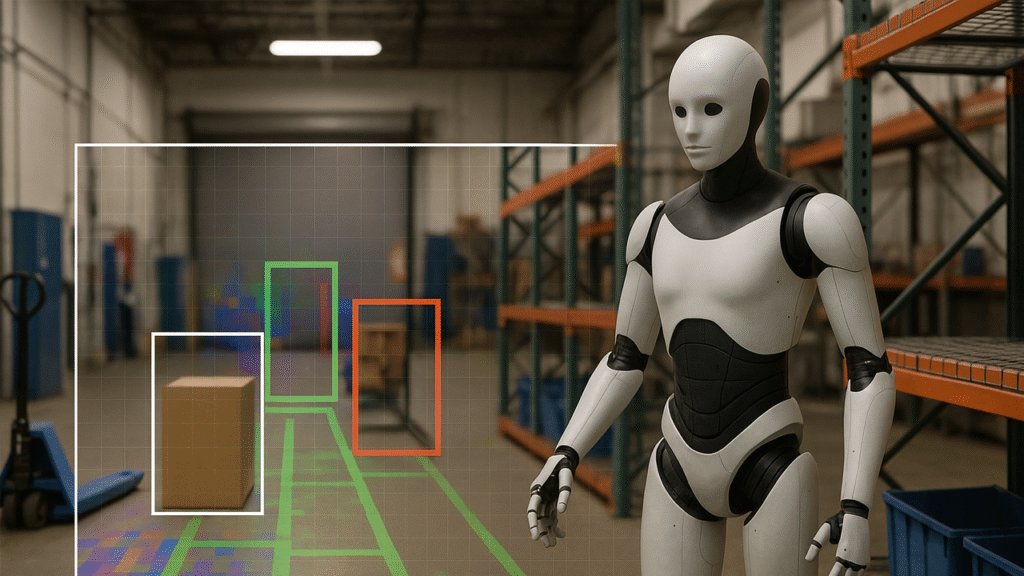

At the heart of robot navigation lie image processing algorithms that transform raw visual data into actionable information. These algorithms perform several critical functions in parallel, beginning with object detection and recognition. Using deep neural networks trained on large datasets, robots can identify and classify objects in real time, distinguishing between static obstacles, moving people, and navigable pathways.
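Object detectors usually emit several overlapping candidate boxes for the same object, so a standard post-processing step is greedy non-maximum suppression (NMS): keep the highest-scoring box and discard others that overlap it too much. The sketch below is a plain-Python version for illustration; the `(x1, y1, x2, y2)` box format and the 0.5 intersection-over-union threshold are assumptions, and production pipelines typically use a vectorized library implementation and run it separately for each object class.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: indices of the boxes kept, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if no higher-scoring kept box overlaps it too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Hypothetical detections: two overlapping boxes on one person, one separate obstacle.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))
```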

Simultaneous Localization and Mapping (SLAM) algorithms represent another cornerstone of robot navigation. These sophisticated systems enable robots to build detailed maps of their environment while simultaneously tracking their position within that space. Visual SLAM techniques use camera data to identify distinctive features in the environment, creating landmark-based maps that persist even as the robot moves through different areas.
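The landmark idea can be illustrated with a deliberately simplified sketch that covers only the mapping half of SLAM. It assumes the robot's pose is already known and that a feature detector reports each landmark as a range and bearing. Each observation is projected into world coordinates and either matched to the nearest existing landmark within a gating radius or added as a new one. Real visual SLAM also estimates the pose itself and tracks uncertainty (for example with an extended Kalman filter or pose-graph optimization); the class names, gate radius, and averaging rule here are illustrative assumptions.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Pose:
    x: float
    y: float
    theta: float  # heading in radians, world frame


@dataclass
class LandmarkMap:
    gate: float = 0.5  # assumed association radius, metres
    landmarks: List[List[float]] = field(default_factory=list)  # [x, y, n_obs]

    def observe(self, pose: Pose, rng: float, bearing: float) -> int:
        """Add or refine a landmark seen at (range, bearing) from a known pose."""
        # Project the robot-frame observation into world coordinates.
        wx = pose.x + rng * math.cos(pose.theta + bearing)
        wy = pose.y + rng * math.sin(pose.theta + bearing)
        # Nearest-neighbour data association inside the gating radius.
        best_idx, best_d = -1, self.gate
        for i, (lx, ly, _) in enumerate(self.landmarks):
            d = math.hypot(lx - wx, ly - wy)
            if d < best_d:
                best_idx, best_d = i, d
        if best_idx < 0:
            self.landmarks.append([wx, wy, 1])
            return len(self.landmarks) - 1
        # Refine the matched landmark with a running average of its observations.
        lm = self.landmarks[best_idx]
        lm[2] += 1
        lm[0] += (wx - lm[0]) / lm[2]
        lm[1] += (wy - lm[1]) / lm[2]
        return best_idx


# Hypothetical run: the same doorway corner seen from two poses, then a new feature.
m = LandmarkMap()
m.observe(Pose(0.0, 0.0, 0.0), 2.0, 0.0)
m.observe(Pose(1.0, 0.0, 0.0), 1.05, 0.0)
m.observe(Pose(1.0, 0.0, 0.0), 3.0, math.pi / 2)
print(m.landmarks)
```

Because matched observations refine an existing entry instead of adding a duplicate, the map stays stable as the robot revisits an area, which is what lets it persist across movement.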

The processing power required for these operations is substantial. Modern humanoid robots employ specialized hardware, including Graphics Processing Units (GPUs) and dedicated AI accelerator chips, to handle the computational demands of real-time computer vision. These systems must process image streams from several cameras, typically at tens of frames per second each, quickly enough to make split-second decisions about navigation paths and obstacle avoidance.

Depth Perception and Spatial Understanding

One of the most challenging aspects of robot navigation involves accurate depth perception and spatial understanding. Humanoid robots employ several techniques to achieve this capability, with stereo vision being among the most common approaches. By measuring the disparity, the small horizontal shift of the same point between images captured by two cameras mounted a known distance apart (the baseline), robots can calculate the depth of objects and obstacles. Precision is good at short range but degrades with distance, because distant objects produce smaller disparities.
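For a rectified stereo pair, depth follows from Z = f·B / d, where f is the focal length in pixels, B the baseline in metres, and d the disparity in pixels. Differentiating gives a first-order depth error of roughly Z² / (f·B) times the disparity error, which is why precision falls off with the square of distance. The camera parameters and the 0.25-pixel matching error below are hypothetical values chosen for illustration.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth of a point from a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means a point at infinity)")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(depth_m: float, focal_px: float, baseline_m: float,
                      disparity_err_px: float = 0.25) -> float:
    """First-order depth error: dZ ~= Z^2 / (f * B) * dd (assumed matching error)."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


# Hypothetical camera: 700 px focal length, 12 cm baseline.
f_px, baseline = 700.0, 0.12
z = depth_from_disparity(42.0, f_px, baseline)
print(f"depth: {z:.2f} m")
for dist in (2.0, 8.0):
    print(f"at {dist} m: +/- {depth_uncertainty(dist, f_px, baseline):.3f} m")
```

With these assumed numbers, quadrupling the distance multiplies the error by sixteen: roughly 1 cm at 2 m versus roughly 19 cm at 8 m. Widening the baseline or using a longer focal length shrinks the error at the cost of a bulkier head or a narrower field of view.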

Time-of-flight cameras and structured light systems provide additional depth information, particularly useful in environments with poor lighting or limited visual contrast. Both are active sensors that emit infrared light: a time-of-flight camera measures how long the light takes to return to the sensor, while a structured light system projects a known pattern and infers depth from how that pattern deforms across surfaces. Either way, the result is a detailed depth map of the surrounding environment.
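The time-of-flight principle reduces to simple arithmetic: light travels to the surface and back, so distance is the speed of light times the round-trip time, halved. Many ToF cameras measure the phase shift of amplitude-modulated light instead of timing a pulse directly; the phase then maps to distance as c·φ / (4π·f_mod) and wraps around at c / (2·f_mod), which limits the unambiguous range. The function names and the 20 MHz modulation frequency below are illustrative assumptions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def distance_from_round_trip(round_trip_s: float) -> float:
    """Pulsed ToF: the light covers the distance twice."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def distance_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Continuous-wave ToF: distance from the phase shift of modulated light."""
    wrapped = phase_rad % (2.0 * math.pi)
    return SPEED_OF_LIGHT * wrapped / (4.0 * math.pi * mod_freq_hz)


def ambiguity_range(mod_freq_hz: float) -> float:
    """Beyond this distance the measured phase wraps and readings alias."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


print(distance_from_round_trip(20e-9))  # a 20 ns round trip
print(ambiguity_range(20e6))            # assumed 20 MHz modulation
```

The timing involved is tiny: a 20 ns round trip corresponds to about 3 m, which is why ToF sensors need dedicated high-speed electronics rather than ordinary camera timing.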

Advanced computer vision systems also incorporate semantic understanding of spatial relationships. Rather than simply detecting objects, modern robots understand the context and meaning of what they observe. They recognize that chairs are typically positioned around tables, that doorways provide passage between rooms, and that stairs require different navigation strategies than flat surfaces.

Dynamic Obstacle Avoidance

The ability to navigate around both static and moving obstacles represents a critical capability for humanoid robots operating in human environments. Computer vision systems continuously monitor the robot’s surroundings, identifying potential collision hazards and calculating alternative pathways in real-time.

Motion tracking algorithms analyze the trajectory of moving objects, predicting future positions to enable proactive avoidance maneuvers. This predictive capability is particularly important when navigating around people, as human movement patterns can be unpredictable and require sophisticated behavioral modeling.
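A common baseline for this kind of prediction is a constant-velocity model: extrapolate each tracked person along their current velocity and compute when, and how closely, they will pass the robot. The sketch below, under that assumption, returns the time and distance of closest approach within a look-ahead horizon. The function name and the 5-second horizon are illustrative choices, and real trackers usually layer richer motion models on top of this baseline.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def closest_approach(robot_pos: Vec2, robot_vel: Vec2,
                     person_pos: Vec2, person_vel: Vec2,
                     horizon_s: float = 5.0) -> Tuple[float, float]:
    """Time (s) and distance (m) of closest approach, assuming constant velocities."""
    # Work in the robot's frame: relative position and relative velocity.
    px, py = person_pos[0] - robot_pos[0], person_pos[1] - robot_pos[1]
    vx, vy = person_vel[0] - robot_vel[0], person_vel[1] - robot_vel[1]
    speed_sq = vx * vx + vy * vy
    # Minimise |p + v t|^2; with no relative motion the distance never changes.
    t = 0.0 if speed_sq == 0.0 else -(px * vx + py * vy) / speed_sq
    t = min(max(t, 0.0), horizon_s)  # only look forward, up to the horizon
    return t, math.hypot(px + vx * t, py + vy * t)


# Robot heading east at 1 m/s; a person 4 m ahead and 4 m to its right, walking north.
t, d = closest_approach((0.0, 0.0), (1.0, 0.0), (4.0, -4.0), (0.0, 1.0))
print(f"closest approach in {t:.1f} s at {d:.2f} m")
```

In this hypothetical scenario the two are on a collision course four seconds out; a predicted distance below the robot's safety radius is the cue to slow down or replan before the conflict develops.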

Path planning algorithms work in conjunction with obstacle detection systems to identify optimal routes through complex environments. These systems consider multiple factors including path length, energy efficiency, safety margins, and the probability of encountering additional obstacles along different route options.
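As one concrete instance of these trade-offs, the sketch below runs A* search on a small occupancy grid, with a cost term that penalizes cells next to obstacles as a crude safety margin. The grid encoding (`#` for blocked), the 4-connected moves, and the penalty value are assumptions chosen for brevity; real planners work on much larger maps that are continuously updated by the perception system.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column)
MOVES = ((1, 0), (-1, 0), (0, 1), (0, -1))


def plan(grid: List[str], start: Cell, goal: Cell,
         margin_penalty: float = 2.0) -> Optional[List[Cell]]:
    """A* on a 4-connected grid; '#' cells are blocked, all others are free."""
    rows, cols = len(grid), len(grid[0])

    def in_bounds(r: int, c: int) -> bool:
        return 0 <= r < rows and 0 <= c < cols

    def step_cost(r: int, c: int) -> float:
        # Base cost 1, plus an assumed penalty for hugging an obstacle.
        near = any(in_bounds(r + dr, c + dc) and grid[r + dr][c + dc] == "#"
                   for dr, dc in MOVES)
        return 1.0 + (margin_penalty if near else 0.0)

    def heuristic(cell: Cell) -> float:
        # Manhattan distance never overestimates, since every step costs >= 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0.0, start)]
    g_cost: Dict[Cell, float] = {start: 0.0}
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if cost > g_cost[cell]:
            continue  # stale heap entry superseded by a cheaper route
        for dr, dc in MOVES:
            nr, nc = cell[0] + dr, cell[1] + dc
            if not in_bounds(nr, nc) or grid[nr][nc] == "#":
                continue
            new_cost = cost + step_cost(nr, nc)
            if new_cost < g_cost.get((nr, nc), float("inf")):
                g_cost[(nr, nc)] = new_cost
                came_from[(nr, nc)] = cell
                heapq.heappush(open_heap, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)))
    return None  # goal unreachable


print(plan(["....",
            ".##.",
            "...."], (0, 0), (2, 3)))
```

Raising `margin_penalty` trades path length for clearance, which is the same balance between efficiency and safety margins described above.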

Machine Learning and Adaptive Navigation

Modern humanoid robots leverage machine learning techniques to continuously improve their navigation capabilities. Through experience and feedback, these systems learn to recognize patterns in their environment and adapt their behavior accordingly. Reinforcement learning algorithms enable robots to optimize their navigation strategies based on successful and unsuccessful attempts at reaching destinations.
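Tabular Q-learning is the simplest version of this trial-and-error idea. The toy below assumes a one-dimensional corridor with the goal at the far end, a small penalty per step, and a reward on arrival; from its own successful and unsuccessful episodes it learns that moving toward the goal pays off. The environment, reward values, and hyperparameters are illustrative assumptions; robots that learn navigation in practice typically train neural-network policies, often in simulation first.

```python
import random
from typing import List


def train_corridor(n_states: int = 6, episodes: int = 1000, alpha: float = 0.5,
                   gamma: float = 0.9, epsilon: float = 0.2,
                   step_penalty: float = -0.01, seed: int = 0) -> List[List[float]]:
    """Tabular Q-learning on a hypothetical 1-D corridor; the goal is the last cell."""
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 = left, action 1 = right
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap episode length
            # Epsilon-greedy: explore sometimes, and break ties randomly.
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next = min(max(s + moves[a], 0), goal)  # walls at both ends
            done = s_next == goal
            reward = 1.0 if done else step_penalty
            # Q-learning target: reward plus discounted best value of the next state.
            target = reward if done else reward + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
            if done:
                break
    return q


def greedy_policy(q: List[List[float]]) -> List[str]:
    """Best learned action in each non-goal cell."""
    return ["left" if row[0] > row[1] else "right" for row in q[:-1]]


print(greedy_policy(train_corridor()))
```

The step penalty is what makes shorter routes preferable; without it, the discount factor alone would still favour reaching the goal sooner, but more weakly.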

Neural networks trained on diverse datasets help robots generalize their navigation skills across different environments. A robot trained to navigate office buildings can often apply similar principles when operating in hospitals, retail spaces, or residential settings, adapting to new challenges while maintaining core navigation competencies.

Edge cases and unusual situations present ongoing challenges for robot navigation systems. Machine learning approaches help address these scenarios by enabling robots to learn from each unique encounter, building a more comprehensive understanding of possible navigation challenges and appropriate responses.

Integration with Human-Robot Interaction

Navigation systems in humanoid robots must account for the social aspects of operating in human environments. Computer vision systems incorporate social awareness algorithms that recognize human behavioral cues and adjust robot movement patterns to maintain appropriate social distances and respect human comfort zones.
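One simple way to encode comfort zones is to cap the robot's speed according to its distance from the nearest person. The sketch below ramps speed linearly between two radii under assumed values: a 0.5 m stop radius, a 1.2 m comfort radius (roughly the outer edge of what proxemics research calls personal distance), and a 1 m/s top speed. The function name and thresholds are hypothetical and would be tuned per deployment.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def social_speed_limit(robot: Point, people: Sequence[Point], v_max: float = 1.0,
                       stop_radius: float = 0.5, comfort_radius: float = 1.2) -> float:
    """Speed cap (m/s) that shrinks as the nearest person gets closer (assumed radii)."""
    if not people:
        return v_max
    nearest = min(math.dist(robot, p) for p in people)
    if nearest <= stop_radius:
        return 0.0  # inside the stop radius: halt and yield
    if nearest >= comfort_radius:
        return v_max  # nobody inside the comfort zone: full speed
    # Linear ramp between the stop radius and the comfort radius.
    return v_max * (nearest - stop_radius) / (comfort_radius - stop_radius)


print(social_speed_limit((0.0, 0.0), [(0.85, 0.0), (3.0, 2.0)]))
```

A linear ramp keeps the behaviour predictable for bystanders; richer schemes shape the zone by the person's heading, leaving more room in front of someone than beside them.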

Gaze detection and intention recognition capabilities allow robots to anticipate human movement patterns and respond appropriately. When a person looks in a particular direction or begins moving toward a specific location, advanced vision systems can predict likely trajectories and adjust robot navigation accordingly.

Communication through movement is another important aspect of socially aware navigation. Humanoid robots use subtle changes in posture, speed, and direction to signal their intentions to nearby humans, creating more natural and comfortable interactions in shared spaces.

Real-World Applications and Case Studies

The practical applications of computer vision-enabled navigation in humanoid robots span numerous industries and use cases. In healthcare settings, robots navigate complex hospital environments, delivering medications and supplies while avoiding patient areas and respecting privacy concerns. Their vision systems must accurately identify medical equipment, navigate around beds and wheelchairs, and respond to the dynamic nature of healthcare environments.

Retail and hospitality applications showcase the customer service potential of humanoid robots with reliable navigation. These systems guide customers through stores, provide information about products and services, and maintain appropriate social interactions while moving through crowded spaces. Their navigation systems must balance efficiency with customer comfort and safety.

Manufacturing and warehouse environments present unique navigation challenges, with robots operating alongside human workers in spaces filled with machinery, inventory, and constantly changing layouts. Computer vision systems in these settings must maintain high accuracy while operating in industrial conditions with varying lighting and environmental factors.

Technical Challenges and Future Developments

Despite significant advances, computer vision navigation in humanoid robots faces ongoing technical challenges. Lighting variations can significantly impact camera performance, requiring adaptive algorithms that function effectively across different illumination conditions. Reflective surfaces, transparent objects, and complex textures can confuse traditional computer vision systems, necessitating increasingly sophisticated detection algorithms.

Computational efficiency remains a critical concern, as real-time navigation requires processing vast amounts of visual data with minimal latency. Battery life considerations in mobile humanoid robots place additional constraints on the computational resources available for computer vision processing.

Weather and environmental conditions pose additional challenges for robots operating in outdoor or variable indoor environments. Rain, snow, dust, and temperature fluctuations can impact sensor performance and require robust system designs that maintain navigation capabilities under adverse conditions.

The Role of 5G and Edge Computing

The emergence of 5G networks and edge computing infrastructure offers new possibilities for humanoid robot navigation. High-speed, low-latency communication enables robots to offload intensive computer vision processing to nearby edge servers, reducing onboard computational requirements while maintaining real-time performance.
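Whether offloading pays off is a latency budget question: uploading a frame, waiting on the network round trip, and running inference remotely must beat onboard processing and still fit within one frame period (about 33 ms at 30 frames per second). The sketch below compares the two options. All figures are hypothetical, including the 100 kB compressed-frame payload and the "degrade" fallback (for example lowering resolution or frame rate), and it ignores network jitter and dropouts, which matter greatly for safety-critical navigation.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame if every frame must be processed."""
    return 1000.0 / fps


def offload_latency_ms(payload_kbytes: float, uplink_mbps: float,
                       rtt_ms: float, edge_compute_ms: float) -> float:
    """Upload time + network round trip + inference on the edge server."""
    # 1 Mbit/s moves 1 kilobit per millisecond; 1 kB = 8 kilobits.
    transfer_ms = payload_kbytes * 8.0 / uplink_mbps
    return transfer_ms + rtt_ms + edge_compute_ms


def choose_execution(onboard_ms: float, payload_kbytes: float, uplink_mbps: float,
                     rtt_ms: float, edge_compute_ms: float, fps: float = 30.0) -> str:
    """Pick the fastest option that fits the frame budget, else degrade (hypothetical policy)."""
    budget = frame_budget_ms(fps)
    options = [
        (onboard_ms, "onboard"),
        (offload_latency_ms(payload_kbytes, uplink_mbps, rtt_ms, edge_compute_ms), "edge"),
    ]
    feasible = [opt for opt in options if opt[0] <= budget]
    return min(feasible)[1] if feasible else "degrade"


# Hypothetical: 45 ms onboard inference vs. a 100 kB frame over a 100 Mbit/s uplink.
print(choose_execution(onboard_ms=45, payload_kbytes=100, uplink_mbps=100,
                       rtt_ms=10, edge_compute_ms=8))
```

With these assumed numbers the edge path takes about 26 ms and wins, but cutting the uplink to 20 Mbit/s pushes it to 58 ms, over budget, which illustrates why offloading depends on consistently high-speed, low-latency links.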

Cloud-based mapping and navigation services allow robots to access detailed environmental information and share navigation experiences with other robots in the same network. This collaborative approach accelerates learning and improves navigation performance across entire robot fleets.

Distributed intelligence systems enable multiple robots to coordinate their navigation efforts, sharing sensor data and path planning information to optimize collective movement through complex environments.

Professional Robot Services and Consultation

The complexity of implementing computer vision navigation in humanoid robots requires specialized expertise and professional guidance. Organizations considering robotic solutions benefit from comprehensive consultation services that assess specific operational requirements and recommend appropriate technological approaches.

Professional robot recruitment services help organizations identify and acquire the right robotic systems for their unique applications. Expert consultants evaluate environmental factors, operational goals, and integration requirements to ensure successful robot deployment and ongoing performance optimization.

For businesses ready to explore the potential of humanoid robots with advanced computer vision navigation, professional consultation and recruitment services provide essential support throughout the evaluation, selection, and implementation process. Contact SALES@ROBOTSOFLONDON.CO.UK or call 0845 528 0404 to book a consultation and discover how advanced robot navigation technology can transform your operations.

Conclusion

Computer vision technology has revolutionized humanoid robot navigation, enabling these sophisticated machines to operate effectively in complex human environments. From advanced image processing algorithms to machine learning-enabled adaptive behavior, modern robots possess remarkable capabilities for understanding and navigating their surroundings.

As these technologies continue to evolve, we can expect even more sophisticated navigation capabilities, improved human-robot interaction, and expanded applications across diverse industries. The future of humanoid robotics lies in the continued advancement of computer vision systems that can match and eventually exceed human perceptual capabilities in navigating the complex world around us.

The successful implementation of these technologies requires careful planning, expert guidance, and ongoing support to ensure optimal performance and return on investment. Professional consultation services provide the expertise necessary to navigate the complex landscape of robotic technology selection and implementation.

This article is sponsored by:

Robot Center – Your premier destination for robot purchasing, consultation, and robotics consultancy services. Expert guidance for all your robotic needs.

Robots of London – Leading provider of robot hire, robot rental services, and robot event solutions. Bringing cutting-edge robotics to your events and operations.

Robot Philosophy – Comprehensive robot consultancy and recruitment services, robot advice, insights, and innovative ideas. Led by RoboPhil (Philip English), a renowned Robot YouTuber, Influencer, Trainer, Consultant, and Streamer in the robotics industry.